Generative AI and LLMs in Clinical and Laboratory Medicine


- What is Generative AI and how is it transforming medicine?

- Why focus on Large Language Models (LLMs)?

- Key goals of the course:

- 🧠 Understand the fundamentals of generative AI and LLMs

- 🧪 Explore applications in clinical and lab settings

- 🔄 Compare local vs commercial tools (ChatGPT, Claude, Mistral, etc.)

- 🛠 Practice with LM Studio, WebLLM

- Course format: 2h theory + 2h practical

What is Artificial Intelligence?

(And why doctors and biologists should care)

- 🧠 Artificial Intelligence (AI) simulates human reasoning and decision-making

- 🤖 Two historical approaches:

- Symbolic AI = rule-based logic (e.g., expert systems)

- Connectionist AI = inspired by the brain (neural networks)

- 📊 Statistical Learning forms the bridge:

- Data-driven techniques to learn patterns from data

- Includes regression, classification, and clustering

- 🧬 Why it matters in medicine:

- Many clinical tasks are decision problems under uncertainty

- From diagnosis to triage to interpretation of lab results

- AI = help, not replacement

Why Do We Need a New AI in Medicine?

Limitations of classical statistical models

- 📊 Classical models (regression, decision trees, etc.) work well with structured, tabular data

- 🧬 But clinical data is increasingly unstructured and complex:

- Free-text reports, multi-language notes, EHRs, medical images

- ❌ Statistical models struggle with:

- Language ambiguity (e.g., “it” → “the liver”?)

- Missing or noisy data

- Context-dependent reasoning

- 🧠 Modern AI (LLMs) can handle this complexity with better generalization on free-text and multimodal data

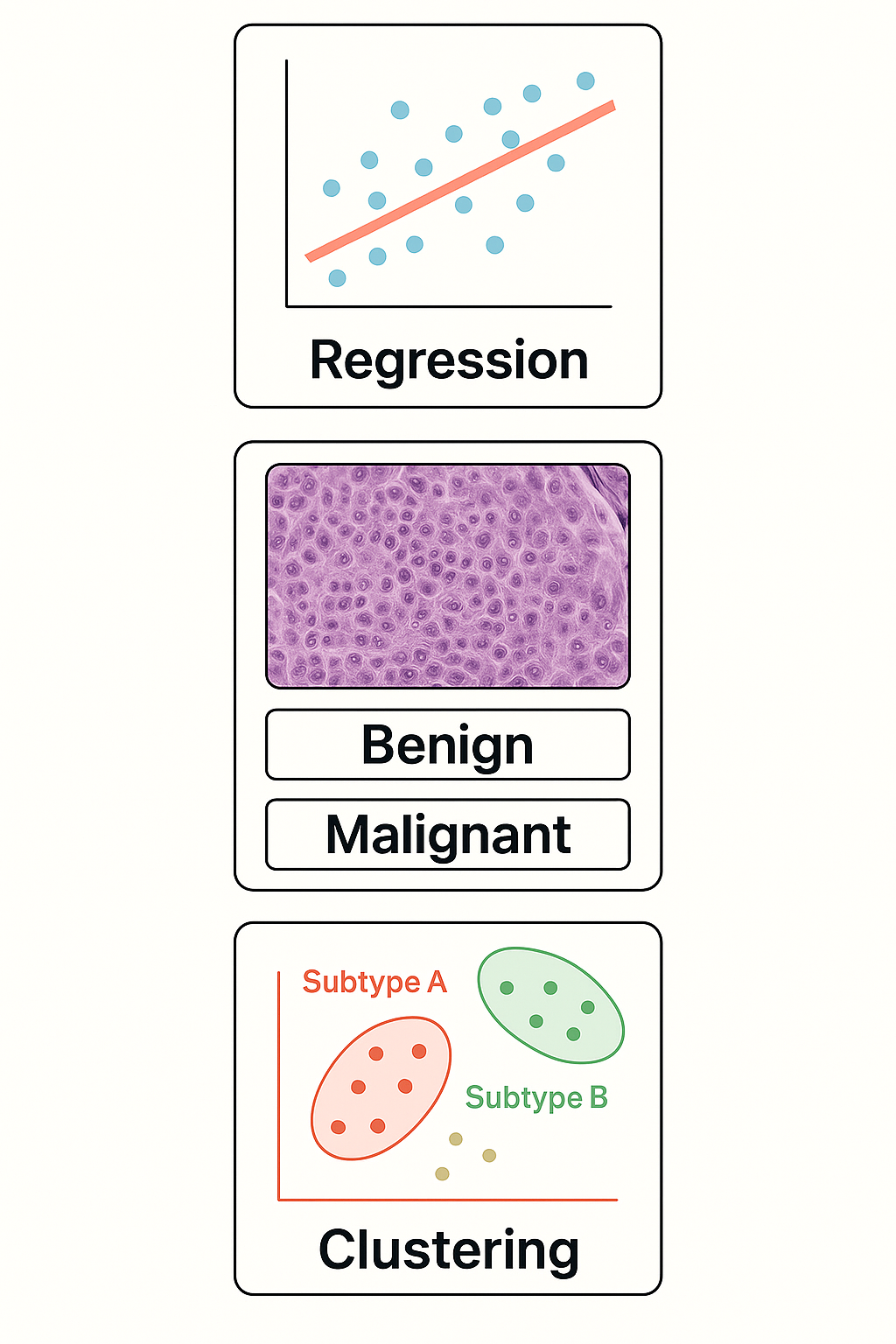

What Can Traditional AI Do?

Core Tasks in Statistical Learning

- 📈 Regression: Predict a number from input data

- Example: Predict blood glucose from age + BMI

- 🧪 Classification: Assign a label to input data

- Example: “Is this biopsy malignant or benign?”

- 🔍 Clustering: Find hidden groups in unlabeled data

- Example: Identify subtypes of patients with similar gene expression

Why Statistical Models Struggle with Language

From structured input to real-world complexity

- 🧱 Traditional models expect structured, tabular input

- 💬 Natural language is unstructured, ambiguous, context-dependent

- Example: “It is elevated” — what is “it”?

- 🧠 Language understanding needs:

- Context resolution (e.g., coreference)

- Syntax and semantics

- Long-range dependencies across sentences

- 📉 Statistical models lack memory or reasoning — they reduce text to bags of words or fixed vectors
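The bag-of-words limitation can be seen in a few lines of Python. This is a minimal sketch, assuming a deliberately naive word counter (the helper `bag_of_words` and both sentences are illustrative, not taken from any clinical system): two sentences with opposite clinical meaning collapse to the same representation once word order is discarded.

```python
from collections import Counter


def bag_of_words(text):
    """Count lowercase words and discard their order (naive illustration)."""
    return Counter(text.lower().replace(".", "").split())


# Two sentences with opposite clinical meaning
a = bag_of_words("ALT is elevated but AST is normal.")
b = bag_of_words("AST is elevated but ALT is normal.")

# Both sentences produce exactly the same word counts
same_representation = a == b
print(same_representation)
```

A model that only sees these counts cannot tell which enzyme is elevated, which is why word order and context need to be represented explicitly.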

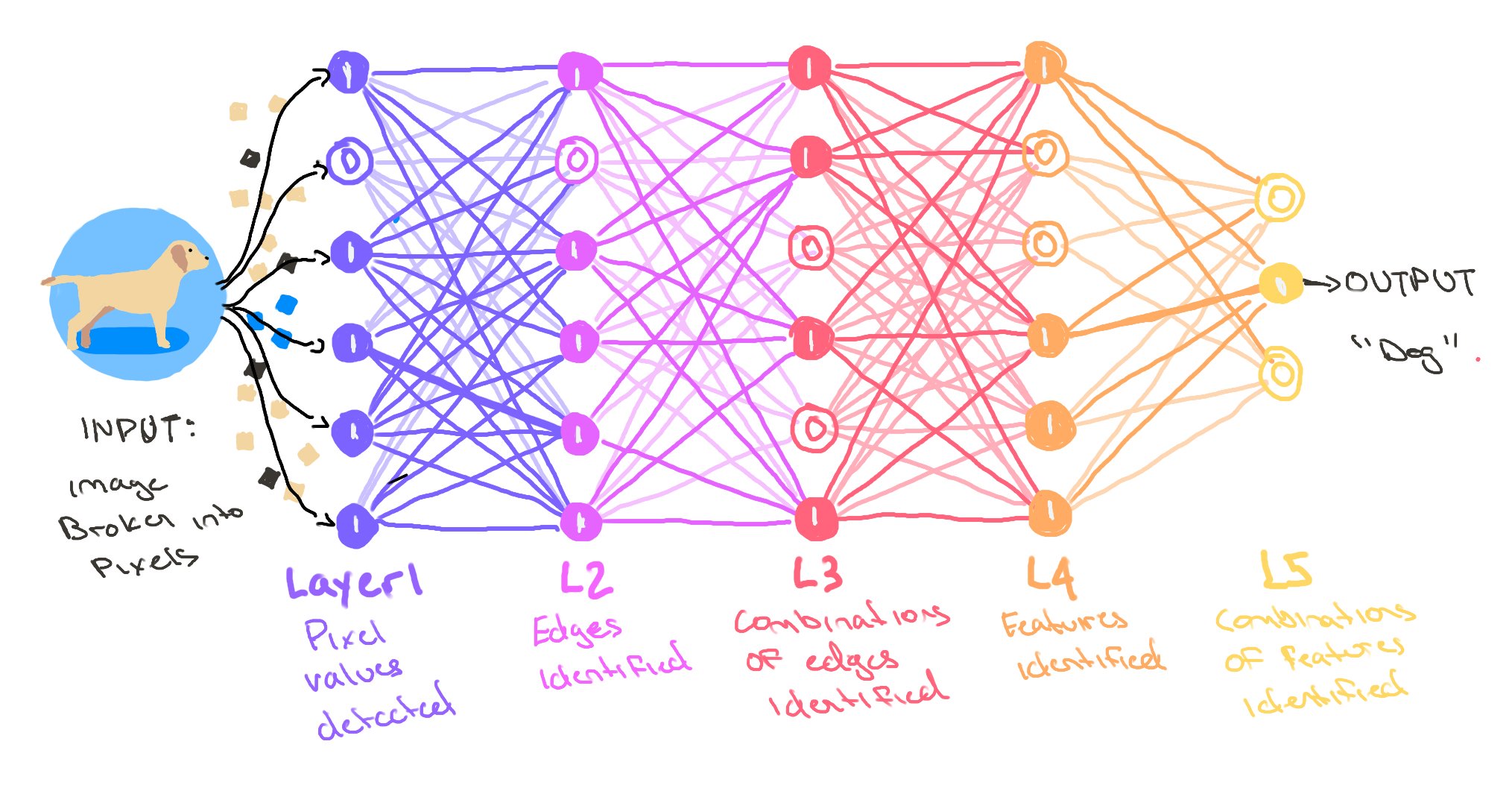

What Is a Neural Network?

From neurons to layers to learning

- 🔢 Neural networks are made of units (“neurons”) connected in layers

- 🧠 Each neuron takes input → does a small math operation → passes output forward

- 📚 By adjusting weights during training, the network learns patterns

- 🧬 This structure lets it capture non-linear relationships (vs classical regression)
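As a rough illustration of "input → small math operation → output", the sketch below wires a few neurons into a tiny two-layer network. All weights and input values are hand-picked, hypothetical numbers; a trained network would learn them from data.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1): the non-linear step."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a non-linearity."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical, already-normalized input features (e.g. age and BMI)
features = [0.5, -1.2]

# Hidden layer with two neurons, then one output neuron (weights are made up)
hidden = layer(features, [[0.8, -0.4], [-0.3, 0.9]], [0.1, 0.0])
output = neuron(hidden, [1.5, -2.0], -0.2)
print(hidden, output)
```

Without the `sigmoid` step, stacking layers would still be equivalent to a single linear model; the non-linearity is what allows the network to capture non-linear relationships.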

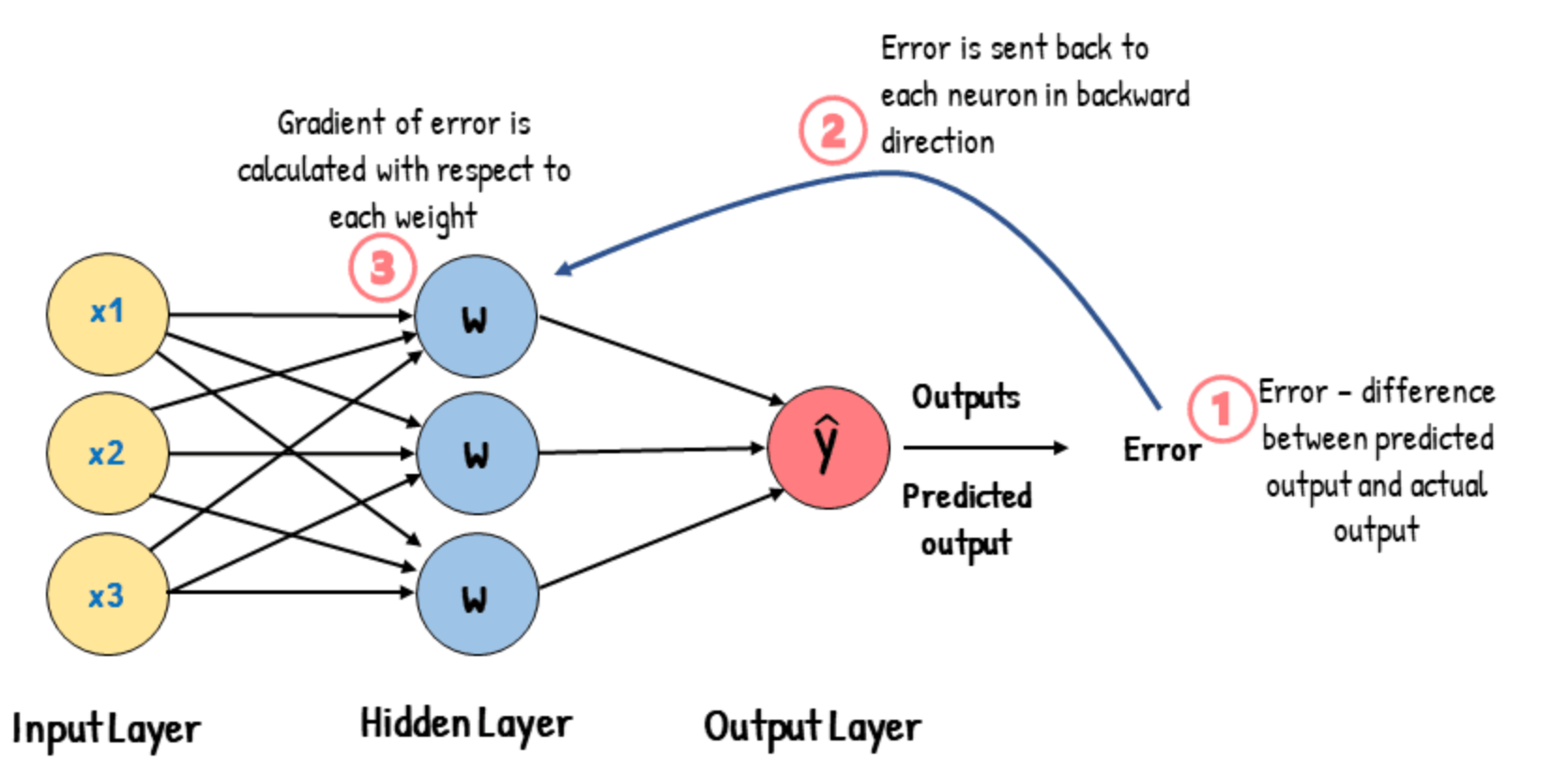

How Do Neural Networks Learn?

The role of loss and backpropagation

- 🧪 During training, the network makes a prediction

- 📉 A loss function compares the prediction to the true label

- 🔁 Backpropagation adjusts the weights to reduce error

- 🔄 This is repeated over many examples → the model learns patterns
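The predict, compare, adjust loop can be sketched on the smallest possible model: a single weight `w` in `y = w * x`. This is an illustrative simplification; with only one weight the gradient can be written by hand, whereas backpropagation is the general procedure that computes such gradients through many layers. The data, learning rate, and epoch count are assumptions chosen for the demo.

```python
def mean_squared_error(w, xs, ys):
    """Loss: average squared gap between predictions (w * x) and true values."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, lr=0.05, epochs=200):
    """Repeat: measure how the loss changes with w, then nudge w downhill."""
    w = 0.0  # start with an uninformed weight
    for _ in range(epochs):
        # Derivative of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad  # step against the gradient to reduce the error
    return w


# Toy data generated by the rule y = 2 * x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w = train(xs, ys)
print(w, mean_squared_error(w, xs, ys))
```

The learned weight approaches 2, the value that generated the data, and the loss approaches zero.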

Why Standard Neural Networks Struggle with Language

The need for sequential memory and attention

- 🔁 Standard networks treat inputs as fixed-length vectors

- 📜 But language is a sequence — word order and context matter

- 🧠 Early models like RNNs and LSTMs were designed to process sequences

- They use memory to retain previous words

- 😕 Still limited: hard to capture long-range dependencies (“it” referring 3–4 sentences back)

From Deep Learning to LLMs

Why Transformers changed everything

- 🧠 Deep Learning = layered neural networks

- 🧱 Types of architectures:

- MLP: good for simple inputs

- CNN: excels in images and spatial data

- RNN: handles sequences like speech and text

- ⚡ Transformer: breakthrough architecture for language

- Uses self-attention instead of recurrence

- Faster, more parallel, better at long sequences

- 🔁 Pretraining + Fine-tuning: strategy behind ChatGPT, Claude, Mistral

Inside the Transformer

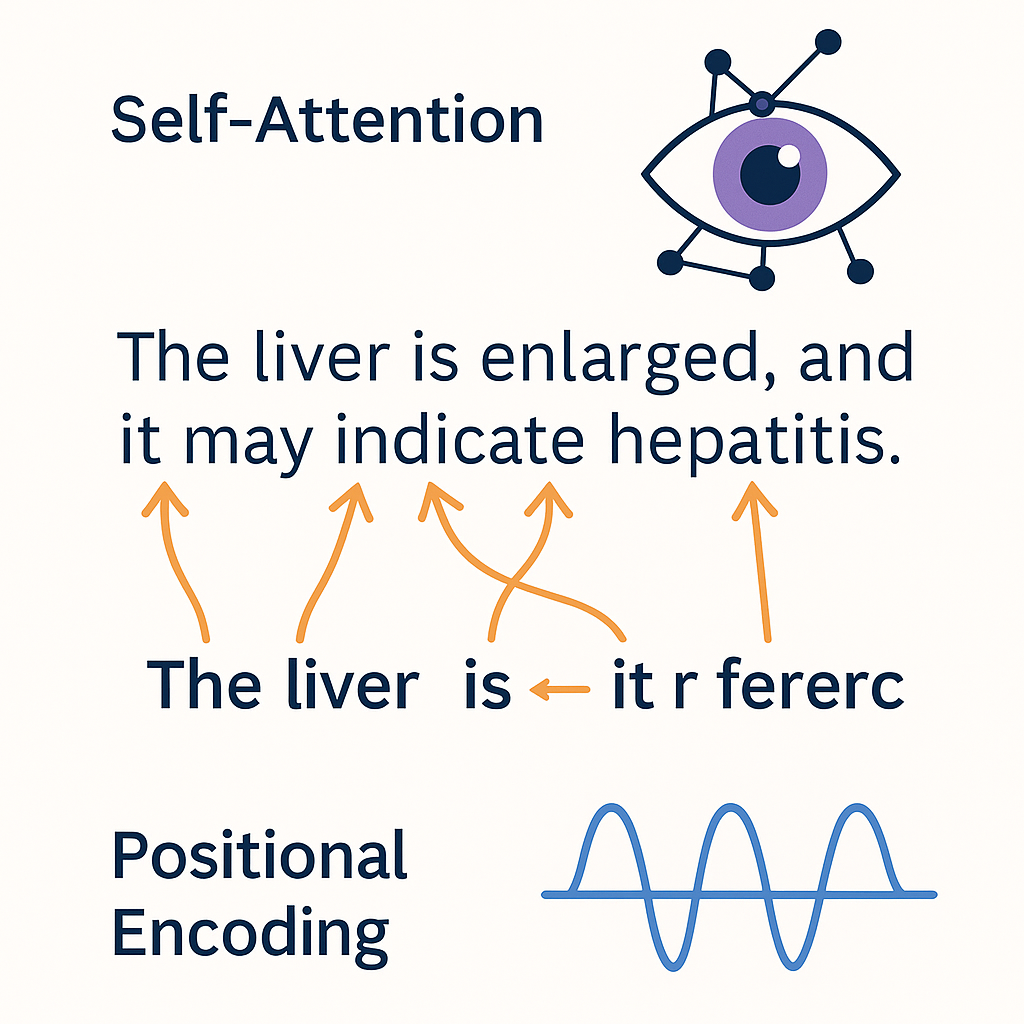

Self-Attention and Positional Encoding

- 👁️ Self-Attention: lets the model look at all words in a sentence at once

- Each word can “attend” to other words — capturing context and meaning

- Example: in “The liver is enlarged, and it may indicate…”, what does “it” refer to?

- 🧭 Positional Encoding: adds order to the sequence

- Transformers process input in parallel, so positions must be encoded explicitly

- 🔍 These are what let LLMs handle long-range dependencies in language

- 🧠 Basis for ChatGPT, BERT, and nearly every modern LLM

What is Self-Attention?

How Transformers “understand” language

- 👀 In self-attention, every word looks at all other words in the sentence

- Each word decides how much to pay attention to the others

- 🧠 Example:

- Sentence: “The liver is enlarged because it is inflamed”

- “it” should focus attention on “liver”, not “because” or “enlarged”

- 🔄 The model builds a map of relationships between words

- Helps resolve references, capture context, understand meaning

- ⚡ Self-attention is computed in parallel for all words, but its cost grows quadratically with text length

How Does Self-Attention Work?

Three steps: Query, Key, Value

- 📚 Each word is turned into three vectors:

- Query (Q): What am I looking for?

- Key (K): What do I offer?

- Value (V): What information do I carry?

- 🔍 Attention Score between two words:

- Take the dot product of one word’s Query with another word’s Key

- 🧮 Then, the scores are scaled, normalized with softmax, and used to weight and mix the Values

- 🎯 This gives each word a new, context-aware representation
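The Query, Key, Value steps can be sketched in plain Python. This is a minimal, single-head illustration of scaled dot-product attention; the three tokens and all vector values are hand-made assumptions chosen so that "it" points at "liver". Real models learn these vectors and use hundreds of dimensions.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """For each word: score against every Key, normalize, then mix the Values."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]  # scaled dot products
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


tokens = ["liver", "because", "it"]
# Hypothetical 2-dimensional vectors, one row per token
Q = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
K = [[2.0, 0.0], [0.0, 1.0], [0.5, 0.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = self_attention(Q, K, V)
for token, w in zip(tokens, weights[2]):
    print(f"'it' attends to '{token}' with weight {w:.2f}")
```

With these made-up vectors, the largest attention weight for "it" falls on "liver", so the new representation of "it" is dominated by the Value of "liver".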

Medical Examples of Self-Attention

How LLMs resolve ambiguity in clinical text

📋 Clinical Note Example:

> “Patient presented with RUQ pain. Ultrasound showed a hypoechoic lesion.
> CT confirmed it was a hemangioma. It measured 2.3 cm.”

🧠 Ambiguity Challenge: Which “it” refers to what?

- First “it” = the lesion (connecting back to previous sentence)

- Second “it” = the hemangioma (immediate previous reference)

👁️ Self-attention allows the model to create these connections automatically

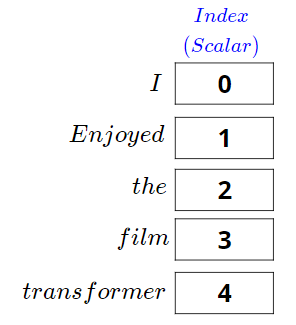

What is Positional Encoding?

How Transformers know the order of words

- 🧠 Transformers see all words together — but they need to know their order

- 🧭 Positional Encoding adds information about position to each word

- 🔢 Two ways to encode position:

- Add fixed patterns (e.g., sine and cosine functions)

- Or learn position embeddings during training

- 🧩 Without positional encoding:

- The model would treat sentences like unordered bags of words!
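The fixed-pattern option can be sketched as follows. This assumes the sine/cosine scheme from the original Transformer design, where even dimensions use sine and odd dimensions use cosine at geometrically spaced frequencies; the 4-dimensional size and the embedding values are illustrative assumptions.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position: sine on even dims, cosine on odd dims."""
    enc = []
    for i in range(d_model):
        # Each pair of dimensions (2k, 2k+1) shares one frequency
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        enc.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return enc


# Hypothetical 4-dimensional embedding for a word at position 3
embedding = [0.2, 0.1, -0.3, 0.4]
pe = positional_encoding(3, 4)

# Position information is simply added to the word's embedding
combined = [e + p for e, p in zip(embedding, pe)]
print(combined)
```

Because every position yields a different pattern, the same word at two different positions enters the Transformer with two different vectors.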

What are Word Embeddings?

Turning words into numbers

- 🔢 Computers need numbers, not text

- 🧠 Embedding = representing a word as a vector of numbers

- 📚 Similar words → similar vectors

- “liver” close to “kidney”, far from “car”

- ➡️ Embeddings capture meaning from large corpora
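"Similar words → similar vectors" is usually measured with cosine similarity. The sketch below uses hand-made 3-dimensional vectors purely for illustration; real embeddings are learned from large corpora and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings (values invented for this example)
embeddings = {
    "liver": [0.9, 0.8, 0.1],
    "kidney": [0.8, 0.9, 0.2],
    "car": [0.1, 0.0, 0.9],
}

sim_kidney = cosine_similarity(embeddings["liver"], embeddings["kidney"])
sim_car = cosine_similarity(embeddings["liver"], embeddings["car"])
print(f"liver vs kidney: {sim_kidney:.2f}")
print(f"liver vs car:    {sim_car:.2f}")
```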

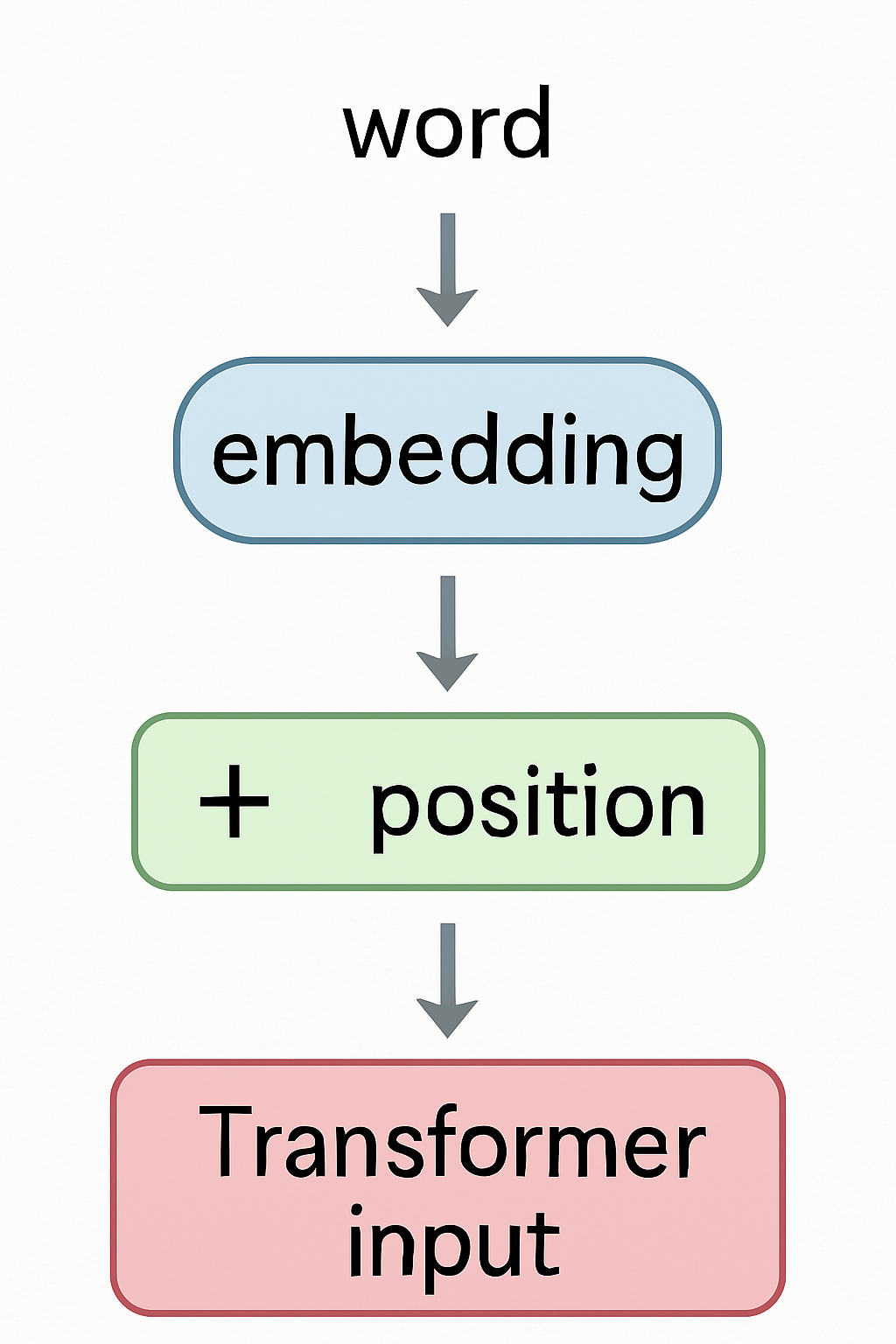

Embeddings in Transformers

First step before Attention

- 🏗️ Each input word is mapped to its embedding vector

- ➡️ Then positional encoding is added

- ⚡ The combined vector enters the Transformer layers

- 🎯 Embeddings are learned and updated during training

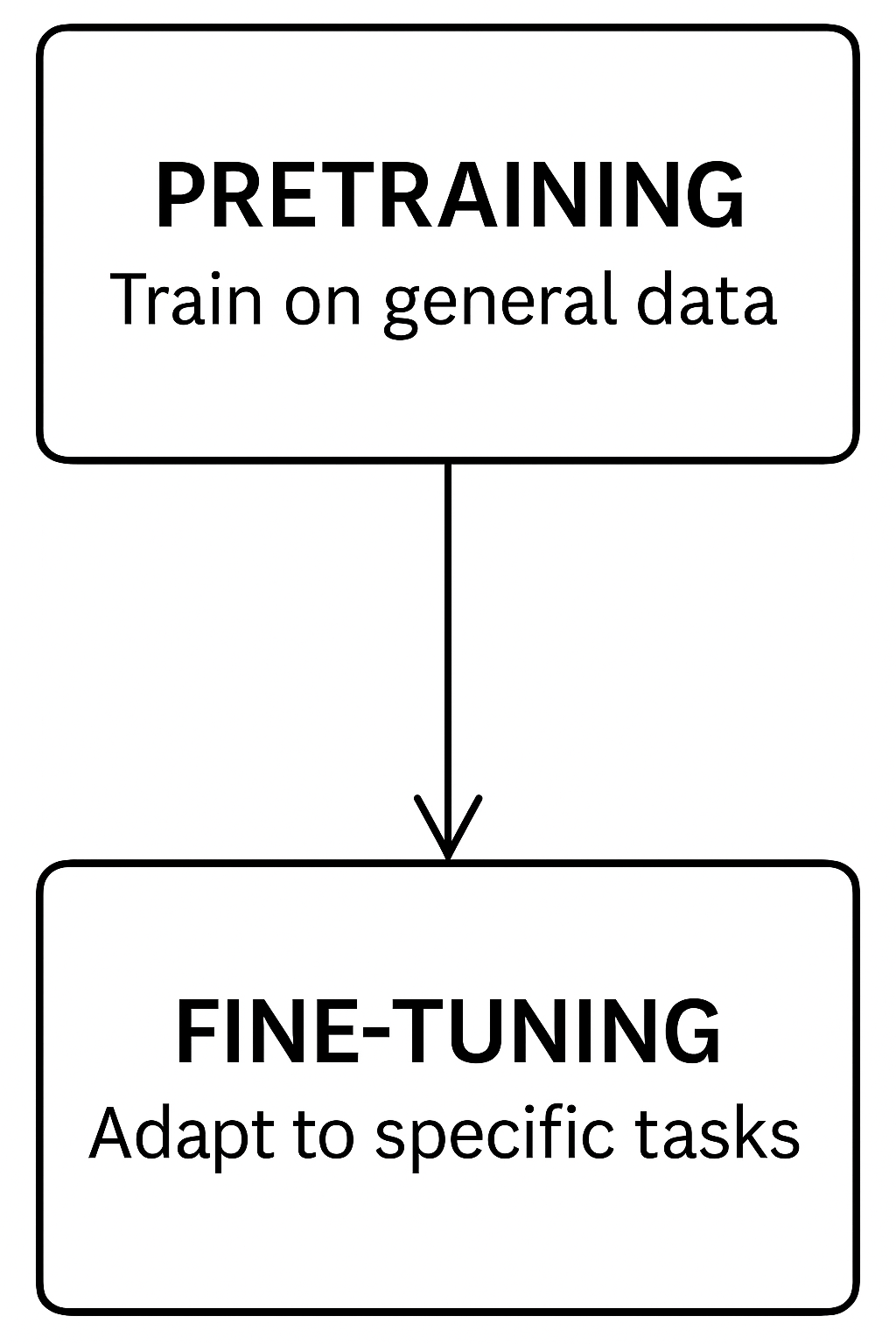

What is Pretraining?

Teaching a model general language skills

- 📚 Pretraining = training a model on huge text datasets

- 🧠 Goal: learn grammar, facts, reasoning patterns

- 🔄 No specific task — model predicts missing words or next words

- 🌍 Data sources: books, websites, medical papers, conversations

- 🎯 Result: a general-purpose model ready for adaptation

What is Fine-tuning?

Specializing the model for a specific task

- 🔧 Fine-tuning = additional training on specific datasets

- 🧪 Goal: adapt the model to medical, clinical, or lab tasks

- 🏥 Examples:

- Predict disease from symptoms

- Summarize lab results

- Generate medical reports

- 🎯 Result: a specialized model focused on a domain

Interpretability in LLMs

Why understanding model behavior matters

- 🔍 Interpretability = understanding how and why a model gives a response

- 🧠 Important in clinical and laboratory settings:

- Explain predictions and recommendations

- Build trust with users and patients

- ⚙️ Emerging techniques:

- Attention visualization

- Feature attribution (e.g., SHAP, LIME)

- Chain-of-thought prompting

- 🚨 Challenge: LLMs are complex and not fully transparent

Limitations of LLMs

Hallucinations and Algorithmic Bias

- 🎭 Hallucinations: model invents plausible but false information

- Danger: confident but wrong answers

- ⚖️ Algorithmic Bias: model reproduces biases from training data

- Risk: unfair outcomes for certain groups

- 🚑 In clinical use:

- Always require human validation

- Prefer domain-specific fine-tuning

Medical Examples of Hallucinations

When LLMs fabricate clinical information

🩺 Prompt: “What are the normal ranges for liver function tests?”

✅ Accurate responses:

- “ALT normal range: 7-56 U/L”

- “AST normal range: 8-48 U/L”

❌ Hallucinated responses:

- “GGT normal range: 15-30 U/L” (actual: 9-48 U/L)

- “Albumin: 3.5-6.0 g/dL” (actual: 3.5-5.0 g/dL)

- 🚨 Clinical dangers:

- False confidence in inaccurate values

- Reference ranges vary by lab/population

- Subtle errors harder to detect than obvious ones
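One way to catch this kind of subtle error is to compare every range a model states against the laboratory's own reference table before anyone relies on it. The sketch below is an assumption-laden illustration: `LAB_REFERENCE` reuses the values from this slide (real ranges vary by lab and population), and the model's claims are assumed to have already been parsed into `(analyte, low, high)` tuples.

```python
# Reference table; in practice this would come from your own laboratory
LAB_REFERENCE = {
    "ALT": (7, 56, "U/L"),
    "AST": (8, 48, "U/L"),
    "GGT": (9, 48, "U/L"),
    "Albumin": (3.5, 5.0, "g/dL"),
}


def check_range(analyte, low, high):
    """Compare a model-stated range with the lab's reference range."""
    ref = LAB_REFERENCE.get(analyte)
    if ref is None:
        return "unknown analyte: needs manual review"
    ref_low, ref_high, unit = ref
    if (low, high) == (ref_low, ref_high):
        return "matches lab reference"
    return f"MISMATCH: lab reference is {ref_low}-{ref_high} {unit}"


# Ranges as stated by the model in the examples above
model_claims = [("ALT", 7, 56), ("GGT", 15, 30), ("Albumin", 3.5, 6.0)]
for analyte, low, high in model_claims:
    print(analyte, "->", check_range(analyte, low, high))
```

A check like this does not replace human validation; it only makes discrepancies visible so a reviewer knows where to look.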

LLMs in Clinical and Laboratory Medicine

Pros and Cons

| ✅ Advantages | ⚠️ Limitations |

|---|---|

| Fast information retrieval | Hallucinations: plausible but wrong answers |

| Assist in decision support | Algorithmic bias from training data |

| Summarize complex medical texts | Lack of full interpretability |

| Help generate reports and documentation | Risk of overconfidence in outputs |

| Available 24/7, scalable support | Dependence on human validation for safety |

LLMs: Pleasing You, Not Seeking Truth

Why plausible ≠ correct

- 🎭 LLMs are trained to sound convincing, not to tell the truth

- 🤝 Goal: produce answers that seem helpful, coherent, pleasant

- ❌ Risk: if unsure, the model guesses plausible but wrong facts

- 🚨 Danger in clinical settings: wrong information can look very credible

- 🧠 Always require critical review and human validation

⚠️ Warning: Plausibility is NOT Truth

Warning

- A fluent, confident answer can still be wrong.

- LLMs are rewarded for sounding helpful, not for being accurate.

- In clinical and laboratory applications: always validate before trusting.

Key Model Parameters in LLMs

How to control AI behavior

- 🌡️ Temperature: randomness level (higher = more creative, lower = more focused)

- 🎯 Top-p (nucleus sampling): sample only from the smallest set of most probable tokens whose cumulative probability reaches p

- ✂️ Max tokens: maximum length of the output

- 🔁 Frequency penalty: discourage repeating the same words

- 🧠 Tuning these parameters helps adapt the model’s behavior to clinical needs
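To make temperature and top-p concrete, the sketch below applies both to a made-up set of next-token scores. The candidate words and their scores (`logits`) are assumptions for illustration; a real model produces scores over its whole vocabulary.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Divide scores by the temperature, then normalize to probabilities.

    Temperature must be > 0 here; APIs usually treat 0 as "always pick the top token".
    """
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the most probable tokens until their cumulative probability reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}  # renormalized over kept tokens


# Hypothetical candidates for the next word and their raw scores
candidates = ["injury", "obstruction", "toxicity", "infection"]
logits = [3.0, 2.0, 1.0, 0.5]

focused = softmax_with_temperature(logits, 0.2)   # low temperature
creative = softmax_with_temperature(logits, 1.0)  # higher temperature

print([round(p, 3) for p in focused])
print([round(p, 3) for p in creative])
print(top_p_filter(creative, 0.9))
```

At low temperature almost all probability sits on the top candidate, so outputs are more repeatable; at higher temperature the alternatives become real options, and top-p then trims the unlikely tail.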

Temperature Settings for Clinical Use

Finding the right balance between creativity and accuracy

- 🌡️ Temperature scale:

- 0.0-0.3: Most deterministic, consistent

- 0.4-0.7: Balanced creativity

- 0.8-1.0: Maximum creativity, unpredictability

- 🩺 Clinical recommendations:

- Patient documentation: 0.1-0.2

- Differential diagnosis: 0.3-0.5

- Patient education materials: 0.4-0.6

- Research brainstorming: 0.7-0.8

⚠️ Example impact:

Temperature 0.1:

> “Elevated liver enzymes may indicate hepatocellular injury.”

Temperature 0.7:

> “Elevated liver enzymes could suggest hepatocellular damage, biliary obstruction, medication effects, or various systemic conditions.”

What Are Tokens?

The building blocks of language models

- 🧩 Tokens = small pieces of text (words, parts of words, symbols)

- 📏 Models process text token by token, not character by character

- 🧮 1 word ≈ 1–3 tokens (depending on language and complexity)

- ✂️ Max tokens caps the length of the generated output; the context window caps input + output together

- ⚡ Costs and speed often depend on the number of tokens used

Example: How Text Becomes Tokens

Real clinical sentence broken into tokens

📄 Sentence:

> “Patient discharged in stable condition.”

🧩 Tokenization:

- “Patient”

- “ discharged”

- “ in”

- “ stable”

- “ condition”

- “.”

🔢 Total: 6 tokens

✅ Even short sentences can use multiple tokens!
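The split above can be mimicked with a toy tokenizer. This is only an approximation for illustration: real LLM tokenizers use vocabularies learned from data (for example byte-pair encoding) and often break rare medical terms into several sub-word pieces, so actual token counts differ between models.

```python
import re


def toy_tokenize(text):
    """Split into words (keeping a leading space) and single punctuation marks."""
    return re.findall(r" ?\w+|[^\w\s]", text)


tokens = toy_tokenize("Patient discharged in stable condition.")
print(tokens)
print("Total:", len(tokens), "tokens")
```

Keeping the leading space attached to the word mirrors how many real tokenizers treat "discharged" at the start of a text and " discharged" in the middle of a sentence as different tokens.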

How LLMs Process Images

Turning pictures into language

- 📸 Images are converted into numerical features (arrays of numbers)

- 🔎 Vision encoder extracts key elements: shapes, colors, objects, text

- 🧠 Features are interpreted by the language model

- 🖋️ Model generates descriptions, answers, or captions based on visual inputs

- ⚡ Clinical use: analyzing X-rays, MRIs, pathology slides, diagrams

Multimodal Applications in Medicine

Beyond text: LLMs with vision capabilities

- 🔬 Clinical applications:

- Describing radiological images

- Interpreting ECG patterns

- Analyzing microscopy slides

- Reading handwritten medical notes

- ⚡ Workflow examples:

- Upload image + add clinical question

- Model interprets visual + text context

- Response incorporates both modalities

🔍 Example prompt:

> “This is a chest X-ray from a 65-year-old patient with shortness of breath. Describe what you see and any potential abnormalities.”

⚠️ Limitations:

- Not FDA-approved for diagnosis

- Variable performance across image types

- Requires clinical verification

General vs Medical-Specific Language Models

Choosing the right tool for clinical applications

- 🌍 General LLMs

Trained on vast internet data ➔ Versatile but shallow in medicine.

Examples: ChatGPT, Claude, Mistral.

- 🩺 Medical-specific LLMs

Trained on clinical records, guidelines, scientific papers ➔ Accurate but less flexible.

Examples: PathChat, BrainGPT, LiVersa.

Note

⚖️ Key trade-off:

Broad skills (general models) vs Deep expertise (medical models)

Specialty-Specific Applications

LLM use cases across medical disciplines

- 🫀 Cardiology:

- ECG interpretation assistance

- Heart failure management protocols

- 🧠 Neurology:

- Cognitive assessment documentation

- Seizure pattern description

- 🔬 Pathology:

- Standardized specimen reporting

- Literature search for rare findings

- 🩸 Laboratory Medicine:

- Test interpretation guidance

- Protocol documentation and standardization

- Complex test sequence planning

Chat Mode vs API Mode

Two ways to interact with LLMs

- 💬 Chat Mode:

➔ Interactive, no coding required.

➔ Best for brainstorming, exploration.

➔ ❗ Less control and reproducibility.

- 🔗 API Mode:

➔ Structured programmatic queries.

➔ Best for automation, scalability.

➔ ✅ Full control over outputs.

Note

⚡ Key Tip:

Use Chat Mode to explore.

Use API Mode to automate.

How Chat Mode Actually Works

The model re-reads everything every time

- 📚 Every new message = model re-reads all previous conversation

- 🔄 Chat history + new user message are sent again at every turn

- 📈 Cost and response time grow with chat length

- 🧠 Model does not have memory between sessions: only current context
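The growth in cost per turn can be simulated without calling any model. In this sketch the token estimate (about 1.5 tokens per word) and the canned assistant reply are assumptions; the point is only that the full history is resent on every turn.

```python
def estimate_tokens(text):
    """Rough assumption: about 1.5 tokens per word."""
    return int(len(text.split()) * 1.5)


def simulate_chat(user_messages, fake_reply="Noted. Here is my answer."):
    """Return the estimated number of tokens sent to the model at each turn."""
    history = []
    tokens_sent_per_turn = []
    for msg in user_messages:
        history.append({"role": "user", "content": msg})
        # The whole history, not just the new message, is sent again
        tokens_sent_per_turn.append(
            sum(estimate_tokens(m["content"]) for m in history)
        )
        history.append({"role": "assistant", "content": fake_reply})
    return tokens_sent_per_turn


sent = simulate_chat([
    "Summarize this discharge note.",
    "Now list the medications.",
    "What follow-up is needed?",
])
print(sent)
```

Each turn sends more tokens than the one before, even though every new user message is about the same length.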

Context Window: How Much an LLM Can Remember

Why token limits matter for conversations

- 🧠 Context window = maximum number of tokens model can process at once

- 📏 Includes both your prompt and the model’s reply

- 🚫 If the conversation exceeds the limit, old tokens are dropped (“forgetting”)

- 📉 Long chats may lose important earlier information

- 🔍 Practical tip: keep prompts concise, summarize when needed

Context Windows and How Much They Cover

Memory limits of major LLMs

| Model | Context Window | Approx. Pages |

|---|---|---|

| 🤖 GPT-3.5 | ~4,096 tokens | ~10 pages |

| 🧠 GPT-4 (standard) | ~8,192 tokens | ~20 pages |

| 🧠 GPT-4 (extended) | ~32,768 tokens | ~80 pages |

| 🤯 Claude 3 | ~200,000 tokens | ~500 pages |

| 🌟 Gemini 2.5 Pro | ~1,000,000 tokens | ~2,500 pages |

| 🧩 Mistral 7B | ~8,192 tokens | ~20 pages |

| 🦙 Llama 2 13B | ~4,096 tokens | ~10 pages |

What Happens When You Exceed the Context Window?

How LLMs handle too much information

- ⏳ When token limit is exceeded, oldest tokens are dropped

- 🧠 Model “forgets” early parts of the conversation

- 🚑 Critical instructions may be lost

- 📉 Performance and consistency degrade

- 🧹 Practical tip: summarize or restate key points periodically
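A simple "drop the oldest first" policy shows how a critical instruction can disappear. This is a sketch of one possible truncation strategy, not a description of how any specific product behaves; the one-token-per-word counter and the 12-token budget are assumptions to keep the example small.

```python
def fit_to_window(messages, max_tokens, count_tokens):
    """Drop the oldest messages until the remaining ones fit the token budget."""
    kept = list(messages)
    while kept and sum(count_tokens(m) for m in kept) > max_tokens:
        kept.pop(0)  # the earliest message is forgotten first
    return kept


def count_words(message):
    """Toy counter: one token per word (assumption for this demo)."""
    return len(message.split())


conversation = [
    "Never suggest doses without citing the source.",  # critical instruction
    "Patient has atrial fibrillation.",
    "Patient takes warfarin daily.",
    "Draft the follow-up plan.",
]

kept = fit_to_window(conversation, max_tokens=12, count_tokens=count_words)
print(kept)
```

The instruction given at the start of the conversation is the first thing to be dropped, which is why restating or summarizing key points matters in long chats.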

Context Window Limitations in Clinical Practice

What happens when medical documents exceed token limits

📄 Typical discharge summary: 500-1000 words = ~750-1500 tokens

⚠️ Truncation risks:

- Previous medical history may be cut off

- Medication information at document end might be lost

- Follow-up instructions could be missing

💡 Clinical example: Patient summary with medication list at the end

- With 4K tokens: Complete information processed

- With 2K tokens: Critical anticoagulation instructions lost

Protecting Context in Long Conversations

Techniques to avoid critical information loss

- 🛡️ Anchor Instructions:

- Repeat critical rules or instructions every few prompts

- 📝 Summary Injection:

- Summarize key points and re-feed them during the chat

- 📚 Structured Prompting:

- Organize inputs clearly: diagnosis, treatments, follow-up

- 🚦 Short Sessions:

- Restart new chats after reaching 70–80% of the token limit

What are APIs?

Connecting to LLMs like professionals

- 🔗 API = Application Programming Interface

- 🛠️ A way to send questions and receive answers programmatically

- 📬 Works like “sending a message” to the model and getting a reply

- ⚡ Allows automation, scaling, and integration into clinical systems

- 🧠 No need to “chat” manually — workflows happen automatically
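As an illustration of "sending a message" programmatically, the sketch below builds a request for an OpenAI-compatible chat endpoint, the style of interface that LM Studio's local server can expose. The URL, port, and model name are assumptions to adapt to your own setup, and `send` only works when such a server is actually running.

```python
import json
import urllib.request

# Assumptions: adjust to your own server and model
API_URL = "http://localhost:1234/v1/chat/completions"  # assumed local address
MODEL_NAME = "local-model"  # hypothetical model identifier


def build_request(note_text, temperature=0.1, max_tokens=300):
    """Build the JSON payload: model, messages, and sampling parameters."""
    return {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system",
             "content": "You summarize clinical notes for clinician review."},
            {"role": "user", "content": f"Summarize this note:\n{note_text}"},
        ],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def send(payload, url=API_URL):
    """POST the payload and return the reply text (requires a running server)."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


payload = build_request("Patient discharged in stable condition.")
print(json.dumps(payload, indent=2))
# summary = send(payload)  # uncomment when a compatible server is running
```

Because the prompt, temperature, and output length are fixed in code, the same request can be repeated for many documents, which is what makes API use more reproducible than manual chatting.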

Quantitative Performance Evaluation

Measuring LLM effectiveness in clinical tasks

- 📊 Key metrics:

- Accuracy: Correctness of medical information

- Consistency: Reliable responses to similar queries

- Hallucination rate: Frequency of fabricated content

- Clinical relevance: Applicability to patient care

- 🔍 Evaluation methods:

- Expert review panels

- Comparison to gold standards

- Inter-model consistency checks

- Structured clinical scenarios

- 📈 Sample results comparison:

| Model | Accuracy | Hallucination Rate |

|---|---|---|

| GPT-4 | 89% | 4.5% |

| Claude 3 | 91% | 3.2% |

| Mistral | 85% | 6.7% |

| Med-PaLM | 93% | 2.8% |
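Metrics like these are typically computed from expert-labeled model answers. The sketch below assumes each answer was reviewed and tagged with two boolean flags, `correct` and `hallucinated`; the four reviews are invented for illustration and are unrelated to the figures in the table.

```python
def evaluate(reviews):
    """Compute accuracy and hallucination rate from expert-reviewed answers."""
    n = len(reviews)
    accuracy = sum(r["correct"] for r in reviews) / n
    hallucination_rate = sum(r["hallucinated"] for r in reviews) / n
    return accuracy, hallucination_rate


# Hypothetical expert review of four model answers
reviews = [
    {"correct": True, "hallucinated": False},
    {"correct": True, "hallucinated": False},
    {"correct": False, "hallucinated": True},
    {"correct": True, "hallucinated": False},
]

accuracy, hallucination_rate = evaluate(reviews)
print(f"Accuracy: {accuracy:.0%}, hallucination rate: {hallucination_rate:.0%}")
```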

Practical Example: Chat Mode

Clinical Prompt for Exploration

- 🧪 Scenario: drafting a discharge summary

- 💬 Prompt:

“Summarize the patient’s hospital stay focusing on diagnosis, treatment, and follow-up instructions.”

- ⚡ Goal: fast text generation for clinician review

- ⚠️ Reminder: always validate for accuracy and clinical relevance

Practical Example: API Mode

Automating Clinical Workflows

- 🔗 Scenario: batch processing of lab reports

- 🛠️ API Call:

Send 100 lab report texts via API, receive 100 clinical summaries

- ✅ Advantage: automation, reproducibility, efficiency

- ⚠️ Reminder: monitor outputs for consistency and medical soundness

Commercial vs Open-source LLMs

Comparing two worlds in clinical AI

- 🏢 Commercial models (e.g., ChatGPT, Claude, Gemini)

- Closed source, proprietary

- Strong performance, constant updates

- Privacy concerns, limited control

- 🧪 Open-source models (e.g., Llama, Mistral, Mixtral)

- Publicly available, customizable

- Greater flexibility and privacy

- Performance varies, requires local resources

- ⚖️ Trade-off: ease of use vs independence and control

Choosing Between Commercial and Open-source LLMs

Which is better for your clinical needs?

| 🏥 Scenario | 🚀 Recommended Approach |

|---|---|

| Rapid prototyping or brainstorming | Commercial model (easy access, strong performance) |

| Handling sensitive patient data | Open-source model (self-hosted, private) |

| Need for strong clinical language precision | Fine-tuned open-source model (customizable) |

| Limited local hardware/resources | Commercial model (cloud-based) |

| Full control over deployment and updates | Open-source model (independence) |

Local Deployment Security Considerations

Protecting patient data with on-premise LLMs

- 🔒 Security advantages:

- No data leaves institutional network

- Complete audit trail within organization

- No dependency on third-party privacy policies

- Compliance with data residency requirements

- ⚠️ Implementation challenges:

- Hardware requirements: GPU servers or clusters

- IT support and maintenance needs

- Model update and versioning management

- Performance limitations vs. cloud models

Note

Consider hybrid approaches: sensitive data on local models, non-PHI on cloud models

LM Studio & WebLLM: Local LLMs for Clinical Use

Run AI models privately and offline

- 🖥️ LM Studio:

- Desktop app for Windows, macOS, Linux

- Download and run open-source models locally

- Offers chat interface and API server

- Ideal for offline, privacy-sensitive tasks

- 🌐 WebLLM:

- Runs LLMs directly in your browser

- No installation or backend needed

- Powered by WebGPU for fast inference

- Great for lightweight, portable deployments

WebLLM: Running LLMs directly in your browser

A simple and private way to use AI locally

- 🌐 Runs directly in Chrome, Edge, Safari (no install)

- ⚡ Powered by WebGPU: fast local inference

- 🔒 No data leaves your computer

- 🛠️ Supports chat, document summarization, Q&A

- 🧠 Great for lightweight clinical tasks and experiments

How to Use WebLLM for Clinical Summaries

Simple steps to summarize clinical documents

- 🌐 Open WebLLM in your browser

- 📋 Copy and paste the clinical text into the chat

- 💬 Write this prompt:

“Summarize the key clinical information, focusing on:

- Primary diagnosis

- Treatments administered

- Follow-up instructions

- Patient condition at discharge.”

✅ The model will process the text locally and generate a summary!

Prompt Engineering for Clinical Applications

Techniques to improve accuracy and reliability

🔍 Chain-of-Thought Prompting:

> “First analyze the lab values, then identify abnormalities,
> then correlate with symptoms, and finally suggest possible diagnoses.”

📋 Few-Shot Examples:

> “Example 1: Patient with [symptoms]… Diagnosis: [condition]
> Now diagnose: Patient with fever, productive cough…”

🧩 Structured Output:

> “Format your response as: Assessment: [text], Plan: [text],
> Follow-up: [text], Patient Education: [text]”
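The three techniques can be combined in one prompt template, and a structured reply can then be checked automatically. This is a sketch under assumptions: `build_prompt`, `parse_sections`, the few-shot example, and the sample reply are all illustrative and not taken from a real model.

```python
import re

SECTIONS = ["Assessment", "Plan", "Follow-up", "Patient Education"]


def build_prompt(case_text, examples):
    """Combine chain-of-thought steps, few-shot examples, and an output format."""
    lines = [
        "First analyze the lab values, then identify abnormalities, "
        "then correlate with symptoms, and finally suggest possible diagnoses.",
        "",
    ]
    for i, (ex_case, ex_answer) in enumerate(examples, start=1):
        lines.append(f"Example {i}: {ex_case}")
        lines.append(f"Diagnosis: {ex_answer}")
        lines.append("")
    lines.append(f"Now assess: {case_text}")
    lines.append(
        "Format your response as: Assessment: [text], Plan: [text], "
        "Follow-up: [text], Patient Education: [text]"
    )
    return "\n".join(lines)


def parse_sections(reply):
    """Split a reply into the requested sections; a missing key flags a malformed answer."""
    pattern = "(" + "|".join(re.escape(s) for s in SECTIONS) + "):"
    parts = re.split(pattern, reply)
    # parts looks like: [prefix, name1, text1, name2, text2, ...]
    return {name: text.strip(" ,\n") for name, text in zip(parts[1::2], parts[2::2])}


# Hypothetical few-shot example and case
examples = [("Patient with dysuria and urinary frequency", "urinary tract infection")]
prompt = build_prompt("Patient with fever, productive cough", examples)
print(prompt)

# Hypothetical model reply, used only to demonstrate the parser
sample_reply = ("Assessment: Likely pneumonia, Plan: Chest X-ray, "
                "Follow-up: 48 hours, Patient Education: Rest and fluids")
parsed = parse_sections(sample_reply)
print(parsed)
```

Requesting a fixed format makes it possible to detect incomplete answers in code, for example by checking that all four sections are present before the text reaches a clinician.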

Regulatory Considerations

Legal framework for AI in healthcare

- 🏛️ Key regulations:

- HIPAA (US): Protected Health Information requirements

- GDPR (EU): Special category data processing restrictions

- MDR (EU): AI as medical device classification

- ⚖️ Compliance challenges:

- Data residency requirements for processing PHI

- Right to explanation for AI-assisted decisions

- Audit trails for AI-generated content

Warning

Always check if your LLM usage requires:

1. Patient consent
2. Data processing agreements
3. Classification as a medical device

Ethical Documentation Requirements

Transparency in AI-assisted clinical notes

- 📝 Best practices:

- Disclose AI assistance in documentation

- Specify which parts were AI-generated

- Document human verification steps

- Maintain separation of AI suggestions and clinical judgment

- ✅ Example disclosure:

> “This assessment summary was drafted using AI assistance and reviewed by Dr. Johnson for accuracy. All interpretations and medical decisions were independently verified.”

Liability Considerations

Managing risk when using LLMs in clinical settings

- ⚠️ Current legal landscape:

- No clear precedent for AI liability in healthcare

- Professional standards still evolving

- Default position: clinician bears ultimate responsibility

- 🛡️ Risk mitigation strategies:

- Document verification procedures for AI outputs

- Establish clear workflows for critical vs. non-critical uses

- Train staff on limitations and verification requirements

- Maintain awareness of model-specific limitations

Note

Consider consulting with risk management and legal counsel before implementing LLMs for clinical decision support.

Cost-Benefit Analysis of LLMs in Clinical Settings

ROI considerations for medical implementations

- 💰 Cost factors:

- API costs: $0.50-$20 per 1,000 clinical notes (model dependent)

- Staff time saved: 20-40% reduction in documentation time

- Training & implementation: 40-80 hours per department

- 📊 Real-world ROI examples:

- Hospital A: 50% reduction in discharge summary time (saving 15 min/patient)

- Clinic B: 30% increase in note completeness and quality

- Lab C: 70% faster protocol drafting for new tests

Note

ROI is typically reached within 3-6 months when focusing on high-volume documentation tasks.